The deepfake photos should serve as a warning about how much worse our AI problem could get if businesses and regulators do nothing.

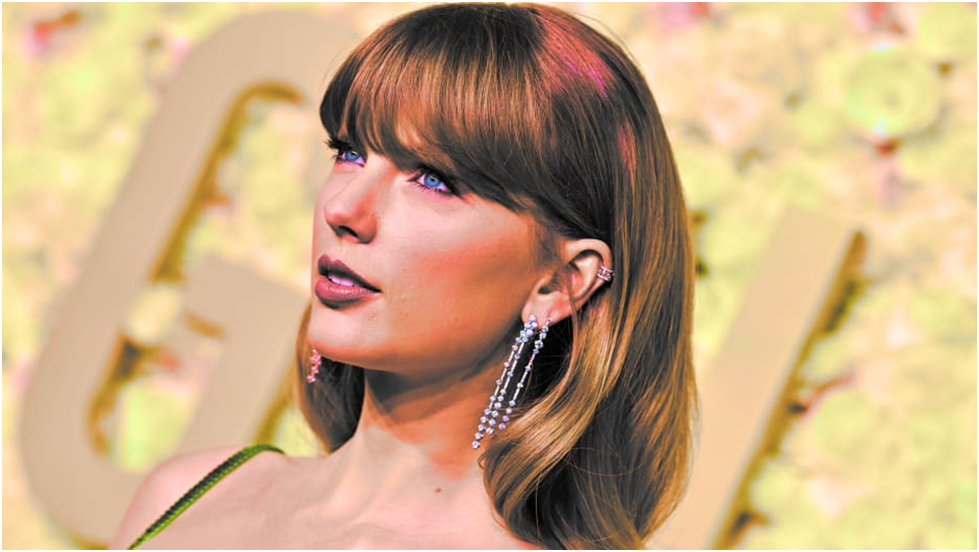

Taylor Swift has taken on record companies in her battle for control of her music. She is a Grammy Award winner and the headliner of the first billion-dollar tour in history.

However, her influence was undermined overnight by deepfake pornography, the most crass and eerily familiar way a woman could be exposed.

Fast Company has decided not to display or link to the explicit images, which depict a variety of AI-generated sex acts. The pictures were viewed more than 45 million times before the account that posted them was taken down. The account was active for about a day before it was suspended. Swift’s representatives did not respond to Fast Company’s request for comment.

Degrading women by reducing them to sexual objects and producing graphic depictions of them is a long-standing practice. More than a century ago, the New York Evening Graphic used composite photos to show sexualized pictures of women, superimposing some people’s heads on other people’s bodies. However, the combination of instant social media virality around Swift and the unchecked growth of generative AI tools marks a low point and, should companies and regulators fail to act, a warning about the future of sexual abuse online.

In an effort to limit the harm, Swift’s supporters banded together and flooded social media search results with innocuous images. However, the truth is that these AI-generated images already exist and are visible to the public, which is heartbreaking for those who have been victimized by revenge porn and the emerging trend of deepfaked images.

According to Carolina Are, a platform governance researcher at the Centre for Digital Citizens at Northumbria University, the incident brings a number of problems to light. One is the way society views women’s sexuality in general and the sharing of nonconsensual images in particular, whether such images are taken from women or created by AI. “It just leads to this sense of helplessness,” she says. It comes down to being powerless over one’s own body in digital spaces, and she fears that, unfortunately, this will just become the norm.

Users have reposted the images on Reddit, Facebook, and Instagram. Platforms, notably X, where the images were first shared, have been trying to stop their spread by suspending accounts that post the content. X did not immediately respond to a request for comment, and the pictures are still being reshared there.

“What I’ve been noticing with all these worrying, AI-generated content is that that content seems to thrive when it’s nonconsensual, when it’s not people who actually want their content created, or who are sharing their body consensually and willingly to work,” Are notes. “However, the same content is heavily moderated when it’s consensual.”

Swift is by no means the first person to fall victim to nonconsensual, AI-generated imagery. (A number of media outlets have previously reported on the existence of other networks that facilitate the production of deepfake pornography; Fast Company is not linking to such services.) In October, a scandal involving deepfake images of more than 20 women and girls, the youngest of whom was just 11 years old, tore apart a community in Spain. Deepfake pornography has also been used to extort money from victims.

Swift, however, is arguably the most well-known victim and the one most likely to have the resources to fight back against the tech platforms themselves, which may push some generative AI tools to change course.

A non-academic study of almost 100,000 deepfake videos posted online the previous year found that 98% were pornographic and that women made up 99% of the subjects. Of the people in the videos, 94% worked in the entertainment sector. In a related survey of more than 1,500 American men, three out of four respondents said they didn’t feel bad about viewing AI-generated deepfake porn. A third each said that it wasn’t harmful because they knew it wasn’t the actual person, and that nobody is harmed as long as it’s only for their own personal use.

However, it is harmful, and people should feel bad about it: provisions in the U.K.’s Online Safety Act and a New York statute make it illegal to share nonconsensual sexual images that have been deepfaked, with a one-year jail penalty for doing so.

“The harm caused by deepfakes of this type, which are essentially sexual abuse and harassment, is clearly being fueled by a systemic and persistent disregard for bodily sovereignty,” says Seyi Akiwowo, CEO and founder of Glitch, a nonprofit organization that advocates for greater digital rights for underrepresented groups on the internet.

“Tech companies must act decisively to safeguard women and underprivileged groups against the possible harms of deepfakes,” asserts Akiwowo.